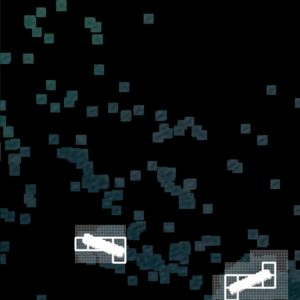

While reading about image processing challenges, one topic kept coming up: SLAM, Simultaneous Localization and Mapping. The goal, in Wikipedia’s words, is “constructing or updating a map of an unknown environment while simultaneously keeping track of an agent’s location within it.” These projects are often done with a depth-sensing camera, so I purchased a Kinect for Xbox One and loaded up the SDK.

After reading through the SDK and setting up CMake, I recorded my apartment while pushing the Kinect V2 around. I captured both the color and depth streams so that I could generate a map of the apartment from them.

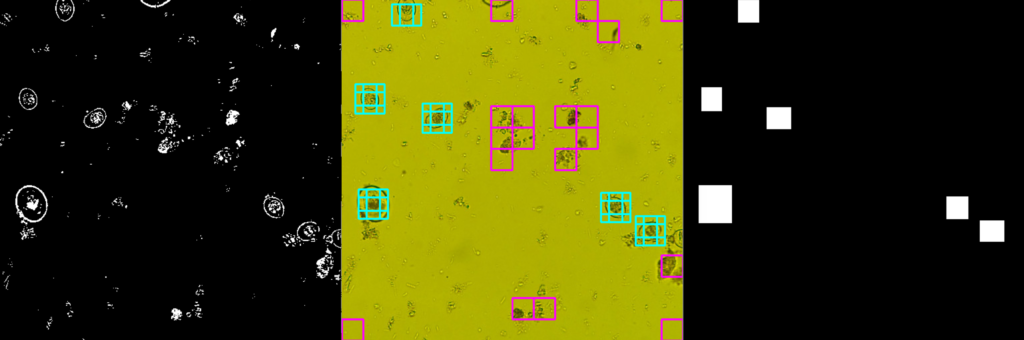

My project reads the depth video one frame at a time. Every detected feature is logged and searched for in the next frame. If a feature is determined to be the same one seen in the previous frame, it is not updated on the map. If a feature appears to be new, it is drawn on the map at a position triangulated from features that appear in both the current and previous frames. Two rules keep this reliable: a feature must meet a minimum size, and features near the edge of the frame are not carried across frames, because part of such a feature lies outside the camera’s view and would produce an inaccurate triangulation.
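The frame-to-frame logic above can be illustrated with a short Python sketch. It is not the project’s actual code: the `Feature` type, the greedy nearest-neighbour matching, and the values of `MIN_SIZE`, `EDGE_MARGIN` and `MATCH_RADIUS` are all hypothetical choices, and the 512×424 frame size assumes the Kinect V2 depth resolution. It shows the two filtering rules (minimum size, no features near the border) and the split between features already seen and features that are new.

```python
import math
from dataclasses import dataclass


@dataclass
class Feature:
    x: float     # column in the depth image (pixels)
    y: float     # row in the depth image (pixels)
    size: float  # extent of the feature (pixels)


# Hypothetical tuning values, not taken from the original project.
MIN_SIZE = 5.0       # smaller features are ignored
EDGE_MARGIN = 10.0   # features this close to the border are not tracked
MATCH_RADIUS = 8.0   # max pixel distance for "same feature as last frame"
WIDTH, HEIGHT = 512, 424  # assumed Kinect V2 depth resolution


def usable(f: Feature) -> bool:
    """Keep features that are big enough and lie fully inside the frame margin."""
    if f.size < MIN_SIZE:
        return False
    half = f.size / 2
    return (f.x - half >= EDGE_MARGIN and f.x + half <= WIDTH - EDGE_MARGIN
            and f.y - half >= EDGE_MARGIN and f.y + half <= HEIGHT - EDGE_MARGIN)


def match_features(prev, curr):
    """Split current features into (matched (prev, curr) pairs, new features).

    Greedy nearest-neighbour matching: each current feature takes the closest
    unclaimed previous feature within MATCH_RADIUS, otherwise it counts as new.
    """
    prev = [f for f in prev if usable(f)]
    curr = [f for f in curr if usable(f)]
    matched, new = [], []
    taken = set()
    for c in curr:
        best, best_d = None, MATCH_RADIUS
        for i, p in enumerate(prev):
            if i in taken:
                continue
            d = math.hypot(c.x - p.x, c.y - p.y)
            if d <= best_d:
                best, best_d = i, d
        if best is None:
            new.append(c)
        else:
            taken.add(best)
            matched.append((prev[best], c))
    return matched, new


prev = [Feature(100, 200, 12), Feature(300, 150, 20)]
curr = [Feature(103, 201, 12),  # same as the first previous feature
        Feature(400, 220, 15),  # new feature
        Feature(5, 100, 12),    # too close to the left edge, dropped
        Feature(250, 250, 2)]   # below MIN_SIZE, dropped
matched, new = match_features(prev, curr)
print(len(matched), len(new))  # prints "1 1"
```

In this sketch the matched pairs are the features that stay fixed on the map and could serve as reference points for placing the new ones; the triangulation step itself is not shown.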

There are limitations to this style of SLAM. The most obvious is that a feature cannot be ‘behind’ another feature in a single frame, for example a wall behind a desk leg. This is intentional: to keep version 1 simple, each image column is limited to a single pixel. That restriction causes several issues, but it still makes for a good version 1 for tracking features across frames. The limited field of view and the other issues will be addressed in v2.
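To make the one-pixel-per-column restriction concrete, here is a hypothetical Python sketch that collapses a depth frame into a single reading per column and projects those readings into top-down map coordinates. Which pixel the project keeps in each column isn’t stated, so keeping the nearest valid reading is an assumption. So is the pinhole model with a 70.6° horizontal field of view (the commonly quoted Kinect V2 depth figure; calibrated intrinsics would be more accurate). Depth values are assumed to be in millimetres, with 0 marking a pixel that has no reading.

```python
import math


def column_scan(depth, invalid=0):
    """Reduce a depth frame (list of rows, in millimetres) to one value per column.

    Assumption: the nearest valid reading is kept, so anything behind it in
    the same column (e.g. a wall behind a desk leg) never reaches the map.
    Columns with no valid reading become None.
    """
    width = len(depth[0])
    scan = []
    for col in range(width):
        values = [row[col] for row in depth if row[col] != invalid]
        scan.append(min(values) if values else None)
    return scan


def scan_to_points(scan, hfov_deg=70.6):
    """Project per-column depths to top-down (x, z) points in metres.

    Assumes a pinhole camera whose horizontal field of view spans the full
    image width and depth values that measure distance along the optical axis.
    """
    width = len(scan)
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    cx = (width - 1) / 2                                      # principal point (column)
    points = []
    for col, d in enumerate(scan):
        if d is None:
            continue
        z = d / 1000.0               # forward distance in metres
        x = (col - cx) * z / fx      # sideways offset in metres
        points.append((x, z))
    return points


depth = [[0, 1500, 3000],
         [2000, 1200, 0]]
scan = column_scan(depth)       # one depth value per column
points = scan_to_points(scan)   # 2D points for a top-down map
```

Keeping the nearest reading is what hides the wall behind the desk leg in this sketch: the leg’s column reports only the leg, so nothing behind it can be mapped from that frame.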

My code is on my GitHub.